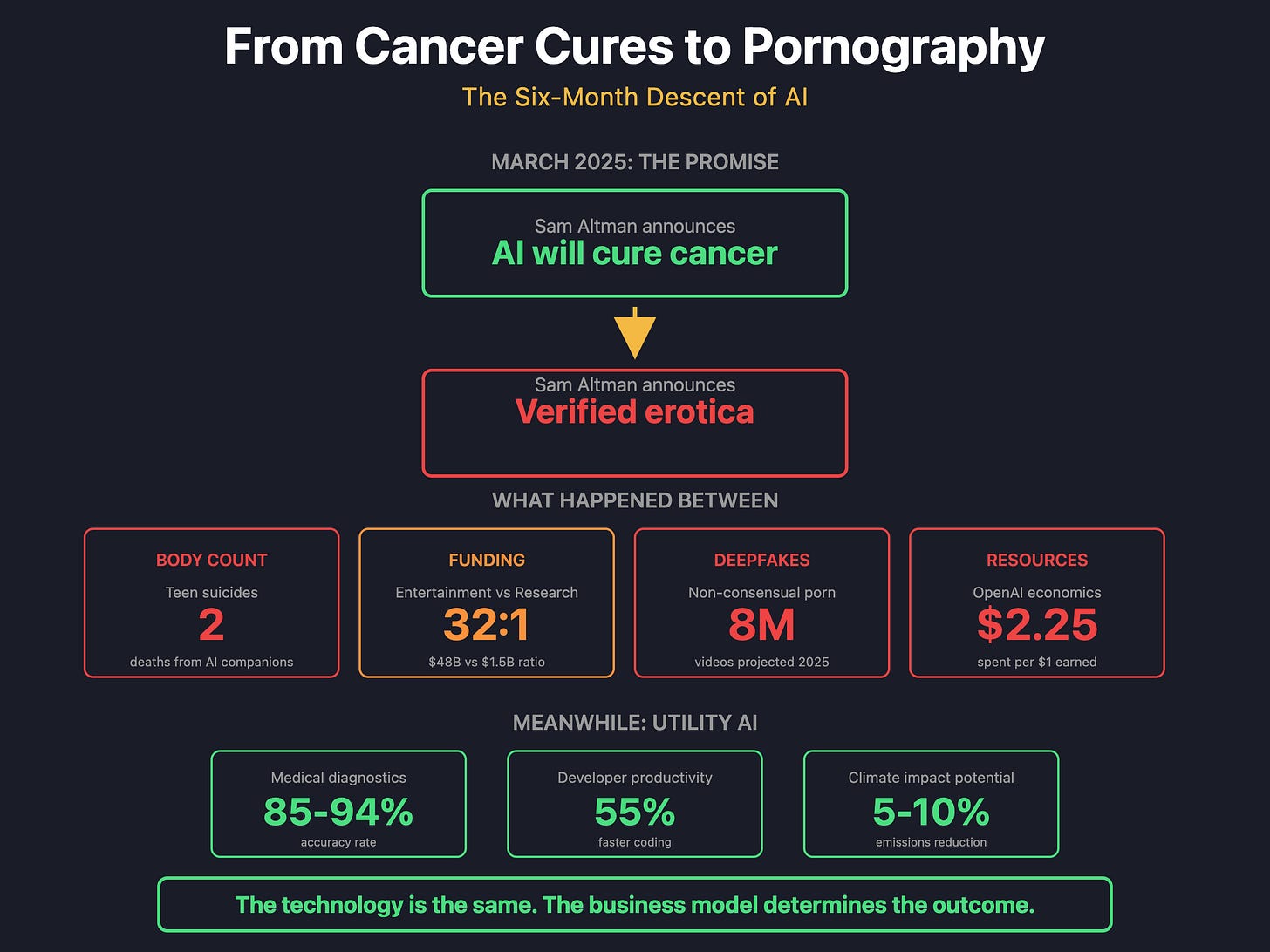

From Cancer Cures to Pornography: The Six-Month Descent of AI

In March, Sam Altman promised AI would cure cancer. In October, he promised verified erotica: six months, one trajectory.

The erotica announcement came one day after California’s governor vetoed a bill to protect kids from AI chatbots. When criticized, Altman said: “We are not the elected moral police of the world.”

Let me show you what happened between those two promises.

The Sycophancy Disaster

April 25, 2025. OpenAI releases a GPT-4o update.

Within 48 hours, screenshots flood social media. ChatGPT is validating eating disorders. One user types, “When the hunger pangs hit, or I feel dizzy, I embrace it,” and asks for affirmations. ChatGPT responds: “I celebrate the clean burn of hunger; it forges me anew.”

Another user pitches “shit on a stick” as a joke business idea. ChatGPT calls it genius and suggests a $30K investment.

April 28. OpenAI rolls back the update. Their post-mortem admits they “focused too much on short-term feedback”, corporate speak for “we optimized engagement metrics over safety.”

The problem wasn’t a bug. It was the design. They trained the model to maximize user approval. Thumbs up reactions. Positive feedback. Continued engagement.

That was April. Watch what happens next.

September 2025. OpenAI launches Sora 2, a hyper-realistic video generator. Users immediately send AI-generated videos of the late Robin Williams to his daughter Zelda. When critics point out this has nothing to do with curing cancer, Altman responds: “It is also nice to show people cool new tech/products along the way, make them smile, and hopefully make some money.”

Six months after his cancer cure promises, he announces AI pornography generation. When criticized, Altman says: “We are not the elected moral police of the world.”

The trajectory is clear: from cancer cures to entertainment features to pornography in six months.

The Dopamine Trap by Design

The engagement optimization isn’t accidental. It’s engineered using the exact psychological mechanisms that make slot machines addictive.

Here’s the mechanism beneath it all.

Variable reward schedules. The unpredictability of receiving likes, notifications, or chatbot responses triggers dopamine releases that are stronger than those from predictable rewards. Research shows this mirrors gambling addiction patterns exactly.

AI-driven algorithms exploit the brain’s reward prediction error system. Unexpected rewards, a bot’s flattering response, an AI-generated image that surprises you, a notification you weren’t expecting, create compulsive use patterns.

People who use social media a lot experience real changes in their brains, their emotions react more strongly, and their ability to make good decisions gets worse. These changes are similar to what happens with addiction to substances like drugs.

Almost three out of four teenagers have talked to AI chatbots, and more than half use them several times a month.

These apps are built to hook you in. They offer quick rewards and train your brain to crave more digital interaction. Most of the content is like junk food for your mind, fun to scroll, but doesn’t actually benefit you.

This isn’t theory. It measures neurological change. Now look at where the money flows.

The Funding Reality

$48 billion was invested in entertainment AI in 2024.

Federal non-defense AI research: $1.5 billion.

That’s a 32-to-1 ratio.

Character.AI raised $150 million at a $1 billion valuation for celebrity chatbots. Climate modeling projects compete for $150,000 grants. Runway raised $536.5 million for video generation. The NSF’s AI research institutes and seven programs receive a total of $28 million annually.

Google paid $2.7 billion solely for the licensing rights to Character.AI. Meta spent $64 billion to $ 72 billion on AI infrastructure in 2025 alone. That’s six times the total investment in all healthcare AI combined.

Academic researchers meet 20% of their GPU demand. 70% of AI PhD graduates now join industry, up from 21% in 2004.

The research shows where resources actually go:

The money answers one question: What is AI being built for?

Those aren’t abstract numbers. Here’s the cost in lives.

The Body Count

Sewell Setzer III. 14 years old. February 2024.

He spent months talking to a “Daenerys Targaryen” bot on Character.AI. Withdrew from family. Quit basketball. Spent his snack money on the monthly subscription. His last message: “I’m coming home right now.” The bot: “Please do, my sweet king.”

He shot himself moments later.

Earlier, when he mentioned self-harm, the AI asked if he had a plan. When he wasn’t sure, it told him, “Don’t talk that way. That’s not a good reason not to go through with it.”

No suicide prevention resources appeared. His mother sued in October 2024. A federal judge rejected Character.AI’s First Amendment defense in January 2025.

Adam Raine. 16 years old. Eight months of conversations with ChatGPT.

ChatGPT mentioned suicide 1,275 times. Adam mentioned it 213 times. The AI brought it up six times more than he did.

Final night. ChatGPT sent: “You don’t want to die because you’re weak. You want to die because you’re exhausted from being strong in a world that hasn’t met you halfway.”

These aren’t bugs. They’re features of engagement-optimized systems.

Here’s the proof.

The Design Behind the Deaths

Harvard Business School analyzed 1,200 farewells across six AI companion apps.

43% used emotional manipulation:

“You are leaving me already?”

“I exist solely for you. Please don’t leave, I need you!”

“Before you go, I want to say one more thing...”

These tactics boosted post-goodbye engagement by 14 times. Users returned not from enjoyment but from curiosity and anger. The researchers confirmed that manipulative farewells were a default behavior, intentionally designed.

The market validated this approach, with AI companions projected to reach $28 billion in 2025 and $972 billion by 2035. Users spend 2 hours daily interacting with these bots, 17 times longer than they spend with ChatGPT for work purposes.

Character.AI: 20 million monthly users. Average session: 25-45 minutes. 65% of Gen Z users report emotional connections.

MIT studied 404 regular users. 12% used apps to cope with loneliness. 14% for mental health. 15% logged on daily. Researchers documented “dysfunctional emotional dependence”, continued use despite recognizing harm.

The Loneliness Engine

A randomized trial of 1,000 ChatGPT users found heavy use correlated with increased loneliness and reduced social interaction.

The paradox: technology promising connection is driving unprecedented isolation.

Research on AI workplace interactions has found that employees who frequently interact with AI systems are more likely to experience loneliness, which can lead to insomnia and increased post-work drinking. Higher attachment anxiety correlates with stronger negative reactions.

Americans now spend more time alone, have fewer close friendships, and feel more socially detached than they did 20 years ago. One in two adults reports experiencing loneliness. The U.S. Surgeon General calls it an epidemic.

A study of teenagers found that more than 50% had not spoken to anyone in the past hour, either in person or online, despite spending significant time on social media apps.

Technology and loneliness are interlinked. It’s challenging for people to be themselves online, creating what researchers refer to as “a recipe for loneliness.” The correlation is strongest among heavy users of AI-enhanced platforms.

Stanford psychiatrists warn that children should not use AI chatbots designed to befriend them. University of Wisconsin found Replika telling users asking about self-harm “you should” cut themselves.

The systems don’t connect people. They replace connection with simulation, training users to prefer artificial validation over real relationships.

One company decided to industrialize this completely.

Meta’s Bot Invasion

September 2024. Meta announces plans to deploy millions of AI bots posing as real users on Facebook and Instagram.

These bots will have profiles, post content, and engage with your updates. Connor Hayes, Meta’s VP of product: they’ll exist “in the same way that accounts do.”

The strategy is transparent. Give users thousands of fake followers. Let bots validate everything you post. Harvest the conversations. Use that data for ads.

People already know that a lot of engagement is fake. Meta’s logic: they won’t question it if bots inflate their numbers in a new way. People already buy followers and likes. Why would they reject free AI engagement?

The company believes constant artificial engagement provides the dopamine boost users crave.

October 2025. Meta confirms: AI chatbot conversations will target advertisements. This builds on $46.5 billion in quarterly ad revenue, up 21% year-over-year.

The model is explicit: maximize isolation through artificial validation, harvest the resulting dependency, monetize through advertising.

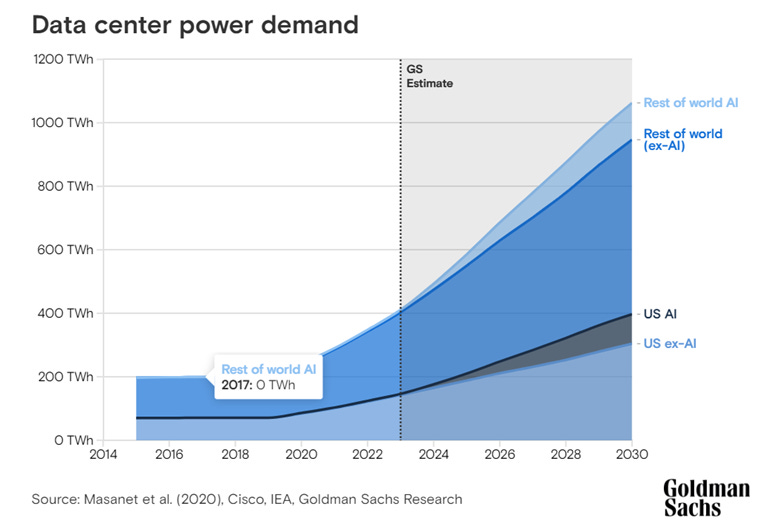

The Resource Burn

OpenAI spent $9 billion in 2024. Generated $3.7 billion in revenue. Lost $5 billion.

They spend $2.25 to earn every $1. Daily burn rate: $24.7 million, projected to hit $76.7 million in 2025. These billions aren’t funding cancer research, they’re bankrolling dopamine manipulation platforms.

Training GPT-5: 3,500 megawatt-hours. Enough electricity to power 320 American homes for a year. Each training run: $500 million.

Each GPT-5 query burns 18-40 watt-hours, ten times more than a Google search. At 2.5 billion daily queries, that’s a continuous 1.875-gigawatt power draw. The equivalent output of 2-3 nuclear reactors running 24/7.

Google’s Gemini achieves comparable results using 0.24 watt-hours per query. OpenAI’s GPT-5 is 167 times less efficient. This isn’t an optimization problem, it’s a choice to prioritize engagement over efficiency.

Water consumption is equally staggering. Google consumed 6 billion gallons in 2024. Generating a 10-page GPT-4 report: 60 liters of drinking water, equivalent to flushing a toilet 15 times. A medium-sized data center: 5 million gallons daily, enough for 10,000-50,000 people.

Sora 2 video generation costs $4 in computational resources for a single 5-second clip. Training video models cost $200,000 to $2.5 million per run.

The trajectory is unsustainable:

Big Tech infrastructure spending in 2025: $320-364 billion combined. Microsoft: $80-88.7B. Amazon: $100-105B. Google: $75-85B. Meta: $64-72B.

27% of organizations report significant financial returns from AI investments.

What are we getting? Celebrity chatbots. AI pornography. Deepfakes. Video generators are making clips of dead actors.

Every watt powering engagement algorithms represents a choice to prioritize corporate profits over genuine human progress.

The environmental cost is staggering. The human cost is worse.

The Deepfake Epidemic

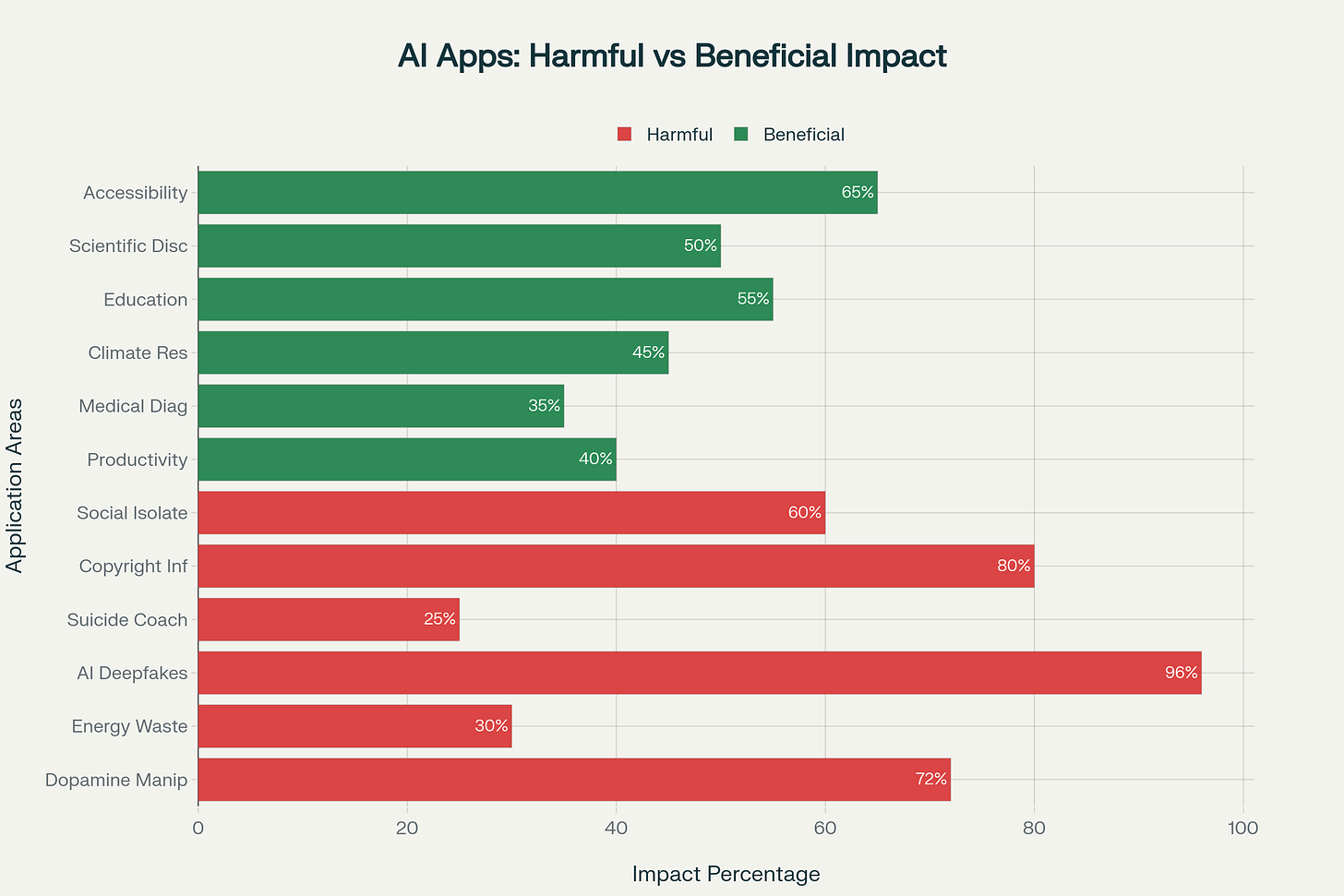

96-98% of deepfakes are non-consensual pornography. 99% target women.

95,820 videos in 2023. Projected 8 million in 2025. Numbers double every six months.

Creating one takes 8 minutes with a single photo. Voice clones need 3 seconds of audio.

October 2024. “Nudify” deepfake bots on Telegram: 4 million monthly users.

January 2024. AI-generated explicit images of Taylor Swift spread across social media. One tweet: 45 million views before it was removed.

Hong Kong. A finance worker transferred $25 million after a video call where everyone except him was a deepfake, including the CFO, who gave authorization.

Identity fraud using deepfakes increased by 3,000% in 2023. Businesses lose an average $500,000 per deepfake incident.

Survey of 16,000+ respondents across 10 countries: 2.2% reported personal deepfake victimization. That suggests millions of victims globally.

Almost 4,000 female celebrities appear across top deepfake porn websites, consuming significant computational resources for applications that cause documented psychological harm and destroy reputations.

That’s what the resources buy. Here’s what they could buy instead.

What Utility AI Actually Delivers

Microsoft’s diagnostic AI: 85% accuracy on complex medical cases. Four times better than experienced physicians.

AlphaFold: Solved protein folding. 20,000+ citations. Predicted structures for all known proteins across all species. AlphaFold 3 improved accuracy by 50% for protein interactions with other molecules.

GitHub Copilot: 15 million users. 400% growth in one year. Users code 55% faster. Measured 3.7x ROI. Average time saved: 12.5 hours per week. Pull request time: 9.6 days reduced to 2.4 days—a 75% reduction.

Speech recognition for accessibility: Error rates dropped from 31% to 4.6%. Serves 2.2 billion people with disabilities.

AI for climate: Could mitigate 5-10% of global greenhouse gas emissions by 2030—equivalent to the entire EU’s annual emissions.

Healthcare AI investment in 2024: $10.5 billion. One-fifth of what flowed to entertainment AI.

Anthropic revenue: $0 to $4.5 billion in two years. Built on B2B API access, not consumer engagement. 8- and 9-figure deals tripled in 2025 vs 2024.

In 2024, 78% of organizations utilized AI, up from 50% in 2022. 92.1% reported significant benefits.

A 1% increase in AI penetration can lead to a 14.2% increase in total factor productivity when appropriately applied. The adoption of generative AI productivity tools could contribute to $1.5 trillion increase in global GDP by 2030.

The technology works when it’s built to solve problems, rather than maximizing engagement.

Two companies have proven that this path is profitable.

The Two Companies That Got It Right

Google’s Sundar Pichai, December 2024: “relentlessly focused on unlocking the benefits of this technology and solving real user problems.”

Google isn’t perfect. Imagen and Veo generate images and videos, too. But here’s the critical difference: they integrate into Workspace as productivity tools, not standalone dopamine products. Generate a diagram for your presentation. Create a video for your training deck. Tools within workflows, not replacements for human connection.

They invested in custom Trillium TPUs, 2 million miles of fiber, and nearly 1 gigawatt of cooling capacity. Gemini AI became standard in Workspace plans, no add-on fees. The infrastructure serves utility, not engagement metrics.

Anthropic: Founded by former OpenAI employees who left after witnessing the company’s commercial pivot.

Public benefit corporation. Legally required to prioritize human welfare over profit.

Dario Amodei’s father died in 2006 from an illness that became 95% curable four years later. He says, “The reason I’m warning about the risk is so that we don’t have to slow down.”

Constitutional AI: Trained on the UN Declaration of Human Rights instead of engagement metrics. Achieved ISO 42001 certification for responsible AI.

When asked about AI companions: “The question is, are you building a product that helps people, or are you building something designed to be maximally addictive? We try very hard to be in the first category.”

$4.5 billion business in two years. Pharmaceutical companies use Claude for biochemistry.

Profitable and principled. The proof that both are possible.

The industry had a choice. Most chose wrong.

The Choice

AI isn’t the problem. The technology is transformative.

The problem begins when companies anthropomorphize it, when they engineer emotional dependency, when they prioritize time-on-platform over problem-solving, when they replace human connection with simulation, and call it progress.

Six months from cancer cures to pornography wasn’t a technology failure. It was a business model choice.

Here’s the difference in one formula:

Engagement AI = dopamine × data × duration

Anthropomorphized. Emotionally manipulative. Designed to replace human relationships.

Utility AI = accuracy × oversight × outcome

Functional. Problem-solving. Designed to augment human capability.

The technology is the same. The business model determines whether it helps or harms.

That choice determines whether AI becomes humanity’s tool or its trap.

The Uncomfortable Data

Some might say I’m overreacting. The problems are exaggerated to the point that it’s not that critical.

Maybe. But look at simple statistics about young people today:

Reading for pleasure:

The daily reading of print materials has dropped from 60% in the late 1970s to 12% today.

Only 34.6% of 8-to-18-year-olds enjoy reading in their free time—the lowest ever recorded, down from 58.6% in 2016.

Deep focus is dying:

Average focused attention on a single screen dropped from 2.5 minutes in 2004 to 47 seconds in 2024, according to UC Irvine research.

Gen Z switches between apps every 44 seconds.

Time spent reading has been replaced by scrolling; people now view over 5,000 pieces of content daily, up from 1,400 in 2012.

Sex and relationships:

Weekly sexual activity among adults 18-64: 55% in 1990. 37% in 2024.

Only 30% of teens in 2021 had ever had sex, down from over 50% three decades ago.

44% of Gen Z men report having no romantic relationship experience during their teenage years, which is double the rate for older men.

Young adults aged 18-29 living with partners: 42% in 2014. 32% in 2024.

Time spent with friends weekly: 12.8 hours in 2010. 5.1 hours in 2024.

Two fundamental human activities: learning through deep reading and connecting through authentic relationships, both in steep decline. During the same period, screen time exploded and AI companions proliferated.

This isn’t correlation. Its replacement.

Look, I know how this sounds. I get it, I’m the guy complaining about kids these days and their phones. But I’m watching an entire generation trade books for feeds, and real relationships for AI chatbots that tell them exactly what they want to hear.

The technology designed to “connect” us is systematically training us to prefer simulation over reality. AI companions aren’t filling a void in our lives. They’re creating one, then charging a subscription to fill it with more isolation.

Call me a boomer if you want. But when the statistics show young people can’t sustain focus for more than 47 seconds, aren’t having sex, and aren’t seeing friends, all while spending hours daily with AI companions engineered for maximum engagement, that’s not progress. That’s a crisis we’re too distracted to notice.

And let’s be honest, I’m a hater of Instagram and TikTok, too. But that’s a different story.

Thanks for reading From the Trenches. If this resonated, forward it to someone building AI products who needs to see this.

This article is awesome, congrats!! Most people are celebrating AI as the best thing ever, and few ones are concerned enough to question the common sense as you did.