The AI Silicon Tax: How Your RAM Got 3x More Expensive While You Weren’t Looking

A few weeks ago, a friend pinged me about upgrading his PC. “Dude, what happened to RAM prices? I’m looking at $270 for a 32GB kit that was $93 six months ago.”

I’m not a PC guy; I’ve been a MacBook user for the last 10 years, and I have a PlayStation 5 that I haven’t turned on in 8 months or so. So I had no clue what was going on with PC parts at all.

I knew about GPUs. Everyone who survived the crypto mining era remembers paying 4x MSRP for graphics cards that sat on scalpers’ shelves. But RAM? That was news to me.

So I dug in. What I found is a story about how AI’s insatiable appetite for silicon is quietly reshaping the entire consumer hardware market, and it’s worse than the crypto days in ways most people don’t see coming.

The Numbers Nobody Is Talking About

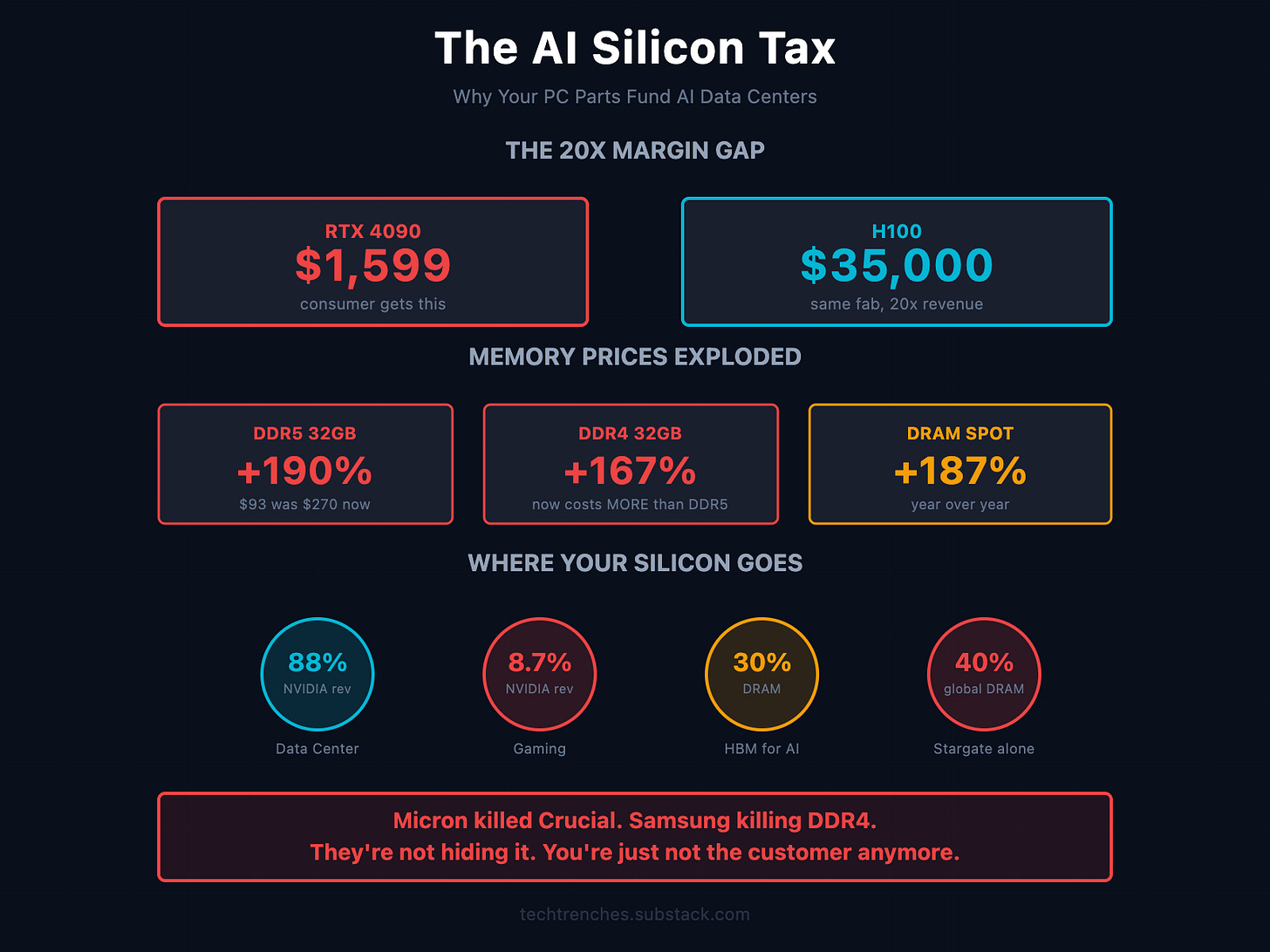

Let’s start with what happened to memory:

G.Skill Trident Z5 NEO DDR5-6000 (32GB): was $125, now $270 (+116%)

TeamGroup DDR5-6000 (32GB): was $93, now $250 (+169%)

Generic DDR4-3200 (32GB): was $90, now $240 (+167%)
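If you want to check my math, here is a quick sketch that recomputes the increases from the before/after prices listed above and adds a price-per-gigabyte figure. The only inputs are the prices in this post; the helper name `pct_increase` is just something I made up for illustration.

```python
# Before/after prices in USD for the 32GB kits listed above.
kits = {
    "G.Skill Trident Z5 NEO DDR5-6000": (125, 270),
    "TeamGroup DDR5-6000": (93, 250),
    "Generic DDR4-3200": (90, 240),
}
CAPACITY_GB = 32  # every kit in the list is a 32GB kit


def pct_increase(old, new):
    """Percentage increase going from the old price to the new price."""
    return (new - old) / old * 100


for name, (old, new) in kits.items():
    per_gb = new / CAPACITY_GB
    print(f"{name}: +{pct_increase(old, new):.0f}%, ${per_gb:.2f}/GB now")
```

Swap in the prices you are seeing at your own retailer; these numbers move week to week.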

DRAM spot prices are up 187% year-over-year. That’s not a typo. Memory is now appreciating faster than gold.

Here’s the kicker: DDR4 now costs about as much per gigabyte as DDR5, and in some listings more. The “budget option” for people with older motherboards no longer saves them anything over the new standard. That’s not how technology is supposed to work.

Why Your PC Parts Are Funding AI Data Centers

The explanation is brutally simple: manufacturers make more money selling to AI companies than to you.

An NVIDIA H100 data center GPU sells for $25,000-$40,000. An RTX 4090 sells for $1,599. Both use similar TSMC production lines. Both require similar die sizes.

The revenue-per-wafer difference? 10-20x higher for AI chips.

When you can sell the same silicon to Microsoft for 20 times what a gamer will pay, the allocation decision makes itself.
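Here is a rough back-of-the-envelope version of that comparison. It is a sketch, not a real wafer economics model: it uses the sticker prices quoted above as a stand-in for revenue, and `relative_dies_per_wafer` is a hypothetical knob I added for how many good AI dies you get per wafer compared with gaming dies. Leaving it at 1.0 takes the "similar die sizes" claim at face value.

```python
H100_PRICE_RANGE = (25_000, 40_000)  # USD, range quoted above
RTX_4090_PRICE = 1_599               # USD, price quoted above


def revenue_per_wafer_ratio(ai_price, gaming_price, relative_dies_per_wafer=1.0):
    """Crude revenue-per-wafer ratio of AI chips to gaming chips.

    relative_dies_per_wafer is an assumption, not a figure from this post:
    1.0 means both products yield the same number of sellable dies per wafer.
    """
    return ai_price / gaming_price * relative_dies_per_wafer


for price in H100_PRICE_RANGE:
    ratio = revenue_per_wafer_ratio(price, RTX_4090_PRICE)
    print(f"H100 at ${price:,}: about {ratio:.1f}x an RTX 4090 per die")
```

Lower the `relative_dies_per_wafer` value if you think the AI part gets fewer good dies out of each wafer; the ratio shrinks in proportion.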

NVIDIA’s numbers tell the story:

| Fiscal Year | Data Center Revenue | Gaming Revenue | DC % of Total |
| --- | --- | --- | --- |
| FY2022 | $10.6B | $12.5B | 39% |
| FY2025 | $115.2B | $11.35B | 88% |

Gaming went from nearly half of NVIDIA’s business to 8.7% in three years. They’re not a gaming company anymore. They’re an AI infrastructure company that happens to still make graphics cards.
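The gaming share can be back-computed from the revenue figures above. This sketch derives an implied total from the data center revenue and its share of the total, then divides gaming revenue by it. The implied total is my own derivation from those figures, not a number NVIDIA reported, so treat the result as approximate.

```python
# (data center revenue $B, gaming revenue $B, data center share of total)
years = {
    "FY2022": (10.6, 12.5, 0.39),
    "FY2025": (115.2, 11.35, 0.88),
}


def gaming_share(dc_rev, gaming_rev, dc_share):
    """Gaming revenue as a fraction of total revenue."""
    implied_total = dc_rev / dc_share  # back-computed, not a reported figure
    return gaming_rev / implied_total


for fy, row in years.items():
    print(f"{fy}: gaming is roughly {gaming_share(*row):.1%} of revenue")
```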

The Memory Manufacturers Are Abandoning You

This isn’t just about GPUs. The memory situation is arguably worse because manufacturers are actively walking away from consumer products.

Micron, one of the three major memory producers, announced in December 2025 that it will completely exit the Crucial consumer brand by February 2026. Their official statement: they want to “improve supply and support for larger, strategic customers in faster-growing segments.”

Translation: we can sell HBM to AI companies for massive margins, so why bother with your gaming rig?

The technical economics explain why. HBM (High Bandwidth Memory) for AI chips:

Uses 35-45% larger dies than equivalent DDR5

Consumes 2.5-3x more silicon per bit

Has 20-30% lower yields

Takes 1.5-2 months longer to produce

Despite all that inefficiency, the margins are so much better that Samsung is tripling HBM production while phasing out LPDDR4 entirely.

HBM went from 14% of total DRAM production in 2024 to nearly 30% in 2025. Projections show it capturing 50% of DRAM market revenue by 2030.

The Stargate Deal

On October 1st, 2025, Sam Altman flew to Seoul and signed letters of intent with Samsung and SK Hynix—the two companies that together control 70% of global DRAM and 80% of HBM production. According to Bloomberg and Reuters, the deal targets 900,000 DRAM wafer starts per month for OpenAI’s Stargate project.

Global DRAM capacity is roughly 2.25 million wafers per month. OpenAI just locked up 40% of it.
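The 40% figure is just division, but it is worth seeing what it leaves for everyone else. A minimal sketch using only the two numbers above (a targeted figure and a rough capacity estimate, so the result is rough too):

```python
STARGATE_WAFER_STARTS = 900_000    # wafers/month targeted, per the reports above
GLOBAL_DRAM_CAPACITY = 2_250_000   # wafers/month, rough estimate from this post

share = STARGATE_WAFER_STARTS / GLOBAL_DRAM_CAPACITY
remaining = GLOBAL_DRAM_CAPACITY - STARGATE_WAFER_STARTS

print(f"Stargate share of global DRAM wafer starts: {share:.0%}")
print(f"Left for everyone else: {remaining:,} wafers/month")
```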

Here’s the detail that should concern you: they’re not buying finished memory modules. They’re buying raw wafers—undiced, unfinished silicon. They’re stockpiling capacity itself.

The panic that followed was predictable. Lead times for new DDR5 orders stretched to 13 months. Japanese retailers implemented purchase limits. Sony stockpiled GDDR6 during the summer price trough—that’s why they can afford Black Friday discounts. Microsoft didn’t secure supply in advance. Xbox prices may rise again.

GPU makers are already canceling products. AMD’s RX 9070 GRE 16GB is reportedly cancelled. NVIDIA’s SUPER refresh has reportedly been pushed to Q3 2026, if it happens at all.

This Is Different From Crypto

The crypto mining crisis was chaotic but temporary. Scalpers bought cards, marked them up, and eventually demand crashed when crypto prices fell. The supply chain itself wasn’t fundamentally altered.

The AI shift is structural. Manufacturers aren’t just responding to temporary demand—they’re redesigning their entire business models around datacenter customers.

NVIDIA CFO Colette Kress said it explicitly: “Gaming revenue was down 22% sequentially due to supply constraints.”

They’re not trying to hide it. They’re constrained because they’re choosing to allocate production to AI chips that generate 10x the margin.

AMD tells the same story. Gaming operating margins collapsed to just 2% in Q3 2024 while datacenter surged 122% year-over-year. Their response? Senior VP Jack Huynh announced AMD is abandoning the high-end GPU market entirely:

“If I tell developers I’m just going for 10 percent of the market share, they just say, ‘Jack, I wish you well, but we have to go with NVIDIA.’”

So NVIDIA has 94% discrete GPU market share, no competition above $600, and no incentive to prioritize consumers.

The Real Winners (And It’s Not You)

Here’s what I hate admitting: for enterprise, this is all good news.

I see it every week working with clients at NineTwoThree. Two years ago, a simple AI pipeline with 2-3 API calls would cost serious money. Today? We’re building complex multi-step workflows with 5-7 model calls that cost less than those basic pipelines did in 2023. Context windows went from 4K to 200K tokens. Inference costs dropped 10x.

Cloud GPU prices are falling too. AWS cut H100 instance pricing by 45%. Lambda Labs offers $2.99/GPU-hour. The arbitrage exists: rent cloud GPUs for bulk processing instead of buying hardware, and the math sometimes works.
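To see when the math works, here is a simple break-even sketch. It combines the $2.99/GPU-hour rental rate above with the $25,000-$40,000 H100 purchase range quoted earlier. It is deliberately naive: it ignores power, hosting, staffing, and resale value, all of which I am assuming away, so it only gives a rough lower bound on how long you would need to run a purchased card.

```python
HOURLY_RATE = 2.99                   # USD per GPU-hour, rental figure above
H100_PRICE_RANGE = (25_000, 40_000)  # USD, purchase range quoted earlier


def break_even_hours(purchase_price, hourly_rate):
    """Rental hours that cost as much as buying the card outright."""
    return purchase_price / hourly_rate


for price in H100_PRICE_RANGE:
    hours = break_even_hours(price, HOURLY_RATE)
    days = hours / 24
    print(f"${price:,}: {hours:,.0f} GPU-hours (about {days:.0f} days of 24/7 use)")
```

If your workload keeps a GPU busy for only a few hundred hours a year, renting wins by a wide margin under these assumptions.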

But consumer hardware doesn’t have this competitive pressure. NVIDIA has 94% market share. No one is undercutting them on RTX cards. Cloud has alternatives. Your next PC build doesn’t.

Corporations negotiate bulk pricing with dedicated account managers. You pay retail in a market no longer optimized for retail customers. Next time you see a headline about cheaper AI API costs, remember: someone’s paying for that optimization. Check your PCPartPicker cart. It’s you.

The Uncomfortable Truth

We’re witnessing the same pattern I’ve written about in Big Tech’s $364 billion infrastructure bet: the industry has chosen the most expensive solution possible.

Instead of optimizing AI models, they’re buying every chip on the planet.

Instead of engineering efficiency, they’re throwing silicon at the problem.

Consumers, gamers, content creators, small businesses, and anyone who needs to build a PC are paying the tax.

The irony is brutal: the companies promising AI will revolutionize productivity are making basic computing more expensive for everyone else.

This isn’t going to fix itself. The margins on AI infrastructure are too good. The demand is too high. The CHIPS Act capacity won’t come online for years.

If you need to build or upgrade a PC, the best time was six months ago. The second-best time is before Q1 2026, when memory prices are projected to climb another 20%+.

Welcome to the AI silicon tax. You’re already paying it.

What’s your experience with hardware prices lately? Have you delayed builds or upgrades because of cost increases? Reply and let me know.


The Stargate wafer deal is the detail most people are missing. Locking up raw undiced capacity means this isn’t a demand spike but a fundamental reshaping of supply chains. I’ve seen similar patterns in enterprise procurement where manufacturers redesign around high-margin customers. Once the tooling and relationships shift, returning to consumer markets becomes economically irrational even when AI demand cools.